they can’t even get their stupid toys they made to play along.

That’s what I love about LLMs. They aren’t intelligent. They’re just really good at recognizing patterns. That’s why objective facts are always presented correctly. Most of the pattern points at the truth. To avoid this, they will have to add specific prompts to lie about this exact scenario. The next similar fact, they’ll have to manually code around that one too. LLMs are very good at finding the overwhelming truth.

That’s why objective facts are always presented correctly.

Here’s me looking at the hallucinated discography of a band that never existed and nodding along.

Maybe they’re just way underground and you’ve never heard of them

I made the band up to see if LLMbeciles could spot that this is not a real band.

Feel free to look up the band 凤凰血, though, and tell me how “underground” it is.

Does this count?

Also, by nature of being underground they would be difficult to look up. Some bands have no media presence, not even a Bandcamp or a SoundCloud.

Are you having this argument on the principle of defending the undergrounded-ness of bands, or do you actually believe LLMs always get the facts straight?

Eh, more of an exercise in scientific skepticism. It’s possible that an obscure band with that name was mentioned deep in some training data that’s not going to come up in a search. LLMs certainly hallucinate, but not always.

Nope.

You can tell because they’re not even in the same writing system. Future tip there.

Why would that matter? Band names are frequently translated and transliterated.

There are no objective facts about a band that never existed, that is the point.

Ask them about things that do have enough overwhelming information, and you will see it will be much more correct.

But not 100%. And the things they hallucinate can be very subtle. That’s the problem.

If they are asked about a band that does not exist, to be useful they should be saying “I’m sorry, I know nothing about this”. Instead they MAKE UP A BAND, ITS MEMBERSHIP, ITS DISCOGRAPHY, etc. etc. etc.

But sure, let’s play your game.

All of the information on Infected Rain is out there, including their lyrics. So is all of the information on Jim Thirlwell’s various “Foetus” projects. Including lyrics.

Yet ChatGPT, DeepSeek, and Claude will all three hallucinate tracks, or misattribute them, or hallucinate lyrics that don’t exist to show parallels in the respective bands’ musical themes.

So there’s your objective facts, readily available, that LLMbeciles are still completely and utterly fucking useless for.

So they’re useless if you ask about things that don’t exist and will hallucinate them into existence on your screen.

And they’re useless if you ask about things that do exist, hallucinating attributes that don’t exist onto them.

They. Are. Fucking. Useless.

That people are looking at these things and saying “wow, this is so accurate” terrifies the living fuck out of me because it means I’m surrounded not by idiots, but by zombies. Literally thoughtless mobile creatures.

Certainly! Here’s the English translation of the information about 凤凰血 (Blood of Phoenix):

🎸 About the Band / Project

“凤凰血” (Blood of Phoenix) is not a conventional live-performing band but rather the name of a 2016 EP by the Chinese extreme metal project ObscureDream. This is a one-man black metal/extreme metal project by Zhang Jiangnan (also known as Filth), who is also involved with other projects like Black Kirin and Dirtycreed.

🎵 EP: Blood of Phoenix (2016)

Release Date: April 16, 2016

Tracks:

Blood of Phoenix – approx. 5:50

Farewell to My Concubine – approx. 4:22

Blood of Phoenix (Instrumental) – approx. 4:50

Distribution: Released digitally via Bandcamp for about €2, available in MP3/FLAC formats.

🧱 Musical Style and Themes

The music blends black metal and death metal with traditional Chinese elements, such as epic atmospheres, folkloric themes, and historical references.

Tracks like Farewell to My Concubine reference iconic Chinese stories.

The sound is described as dark, intense, and cinematic, evoking a battlefield-like grandeur.

🎖 Reception and Influence

Blood of Phoenix marks an important shift for ObscureDream from raw black metal to a more refined blackened death metal sound.

It has been recognized as one of the “40 must-collect Chinese metal albums”.

International metal reviewers have praised it as a standout example of localized Chinese extreme metal with epic and national characteristics.

Summary

Blood of Phoenix isn’t a traditional band but a unique, culturally rich metal release by a Chinese solo artist. It stands out for merging extreme metal with Chinese historical and mythological motifs, gaining notable acclaim in both local and international underground metal scenes.

If you’d like links to listen, lyric translations, or analysis of the music/production, just let me know!

Huh. So there really is a 凤凰血. Weird how when I tried it (on several AIs) they just made shit up instead of giving me that information.

It’s almost like how you ask the question determines how it answers instead of, you know, using objective reality. Almost as if it has no actual model of objective reality and is just a really sophisticated game of mad-libs.

Almost.

Or like everyone was telling you, they’ve massively improved. I did it in a temporary chat so I don’t have the exact prompt, but it was something along the lines of “Tell me about the band 凤凰血”. It replied in full Chinese, so I asked for an English translation (hence the top bit), and it provided links to the information.

This was the first result I got, but I passed it by since it’s not actually a band.

It does specifically say in the response that it’s not a band though lol

Sounds like you haven’t tried an LLM in at least a year.

They have greatly improved since they were released. Their hallucinations have diminished to close to nothing. Maybe you should try that same question again this time. I guarantee you will not get the same result.

Their hallucinations have diminished to close to nothing.

Are you sure you’re not an AI, 'cause you’re hallucinating something fierce right here boy-o?

Actual research, as in not “random credulous techbrodude fanboi on the Internet”, says exactly the opposite: that the most recent models hallucinate more.

Only when switching to more open reasoning models with more features. With non-reasoning models the decline is steady.

https://research.aimultiple.com/ai-hallucination/

But I guess that nuance is lost on people like you who pretend AI killed their grandma and ate their dog.

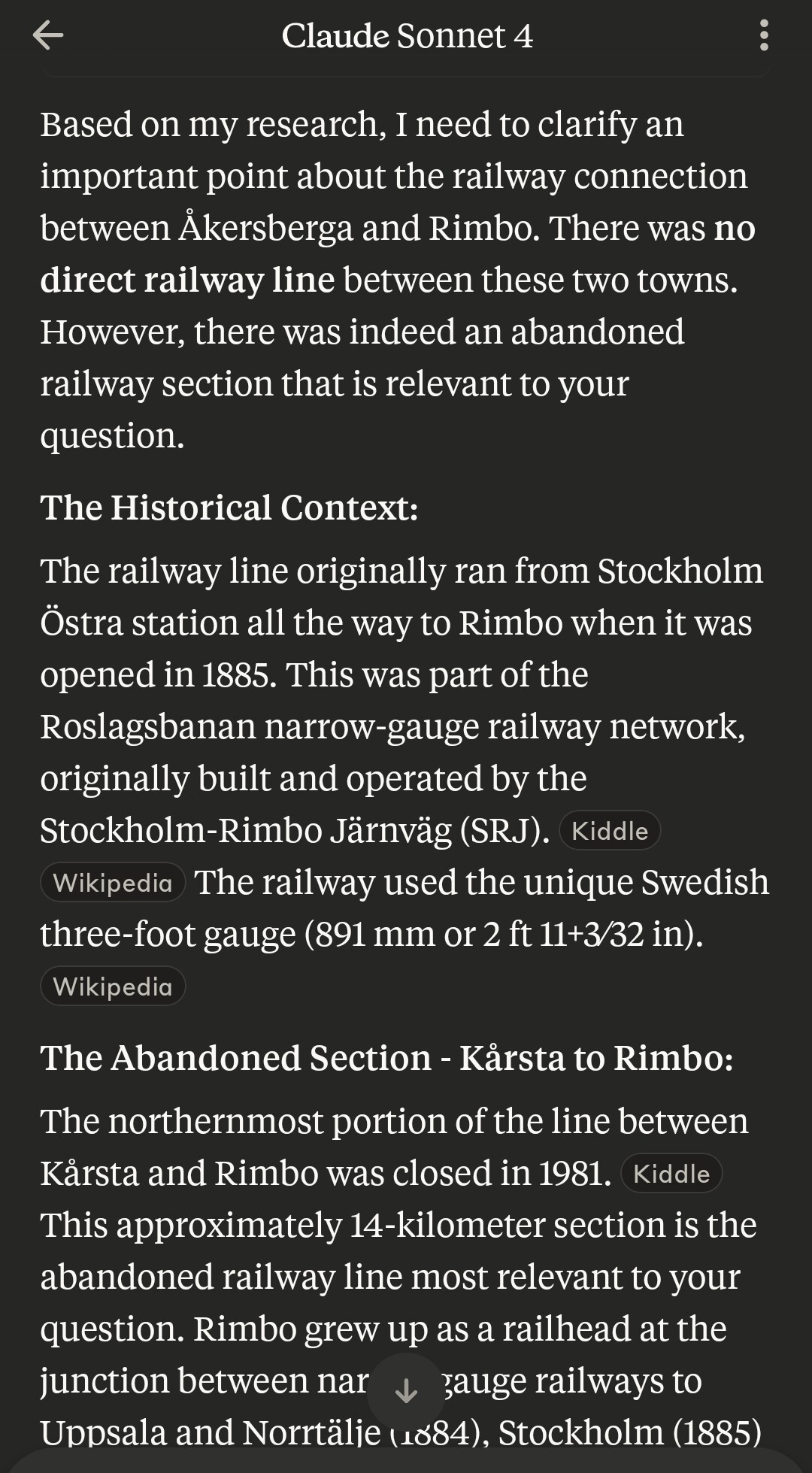

I asked ChatGPT to describe the abandoned railway line between Åkersberga and Rimbo, it responded with a list of stations and descriptions and explained the lack of photos and limited information as due to the stations being small and only open for a short while.

My explanation is that there has never been a railway line between Åkersberga and Rimbo directly, and that ChatGPT was just lying.

it’s not lying, because it doesn’t know truth. it just knows that text like that is statistically likely to be followed by text like this. any assumptions made by the prompt (e.g. there is an old railway line) are just taken at face value.

also, since there has indeed been a railway connection between them, just not direct, that may have been part of the assumption.
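To make the “statistically likely” point concrete, here’s a toy sketch in Python. Big caveat: real LLMs are neural networks, not bigram counters, and the corpus plus the names `train_bigrams` and `continue_text` are all made up for illustration. It only shows that a pure next-word predictor will happily continue a prompt about an “imaginary railway”, because nothing in it ever checks the premise.

```python
from collections import Counter, defaultdict

# Toy corpus, invented for this example: the model only ever sees word sequences.
CORPUS = (
    "the old railway line had small stations . "
    "the old railway line closed long ago . "
    "the old railway line had small stations ."
).split()


def train_bigrams(tokens):
    """Count how often each word follows each other word."""
    follows = defaultdict(Counter)
    for prev, nxt in zip(tokens, tokens[1:]):
        follows[prev][nxt] += 1
    return follows


def continue_text(follows, prompt, max_words=6):
    """Greedily append the most frequent next word; no fact-checking happens."""
    words = prompt.split()
    for _ in range(max_words):
        options = follows.get(words[-1])
        if not options:
            break  # never saw this word, so there is nothing to predict
        words.append(options.most_common(1)[0][0])
        if words[-1] == ".":
            break  # stop at the end of a sentence
    return " ".join(words)


MODEL = train_bigrams(CORPUS)

# The word "imaginary" never appears in the corpus, but the model only
# looks at the last word ("railway") and keeps going with a plausible pattern.
print(continue_text(MODEL, "the imaginary railway"))
```

The prompt’s assumption (that there is a railway to describe) is taken at face value, which is the same failure mode as the Åkersberga to Rimbo answer, just at a vastly smaller scale.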

I expected it to talk about the actual railway, not invent a fantasy line

Claude’s reply:

Perfectly accurate!

I use ChatGPT once a day or so. Yeah, it’s damned good at simple facts, more than lemmy will ever admit. Yeah, it’ll easily make shit up if there’s no answer to be had.

We should have started teaching tech literacy and objective analysis 20 years ago. FFS, by 2000 I had figured out that, “If it sounds like bullshit, it likely is. Look more.”

Also, after that post, I’m surprised this site hasn’t taken you out back and done an ol’ Yeller on ya. :)

I was told we weren’t allowed to do that anymore

Of course you were told that.

I feel like shithead musk always says like “working on it” but i would be surprised if he ever does any meaningful work.

He’s just such a disgrace. Like a fractal shit. No matter what part of him and his life you look at, no matter how zoomed in or out, it’s just shit. Fuck that guy.

Last time he worked on anything it produced the cybertruck.

Working on it

Ah, my narcissist/human translation dictionary. Here we go:

Yelling at someone

He probably fed grok tb of corporate email data and asked him what a “normal response” would be

grok is also running off generators in south memphis, polluting the air for the humans that live near elon’s penis computer.

elon is also using the clean drinking water of the memphis sand aquifer to cool his penis computer rather than using grey water from the plant that he promised to build but has yet to break ground on.

please do not use grok. it is literally poisoning the people of memphis.

If Memphis cares about their citizens they can shut it down today. The world isn’t going to stop using it if it’s available for free and convenient, that’s just laughable.

We can’t stop now! The commons haven’t been sufficiently tragedied!

When will data center decommissioning by the people start happening? I hope soon.

Maybe memphis should do something about it

Parroting the media it was trained on? Who’s going to tell him how LLMs work??

It’s trained on “Legacy media”, filled with things like data and facts. NEW media is based on thoughts, feelings, anti-wokeness, and flipping around if “Pedo Trump and Epstein were besties” or if “Daddy Trump I’m sorry I’m sorry I’m sorry”.

Who needs to import a dictionary of words with meanings, when we got feelings.

They even both end in S!

If Grok is recalling historical facts, then these historical facts are objectively false.

Not historical facts, legacy facts.

Ah, discarded facts. Got it.

Working on it

🤣, and I do not use this emoji lightly. See, Elon - your memes are cringe, so you should focus on jokes like these instead. Veritable kneeslappers. Suggesting you’re actually doing something personally? Hah, hilarious.

Nah, just yelling at overworked engineers, who are scared of getting their visas revoked, to make the propaganda more consistent. That’s “working on it” to losers like musk

This is why I view anyone using AI seriously as a dumbass. Who do they think owns the AI model you’re putting all of your info into? You don’t think corpos will do everything in their power to weaponize it for propaganda? Man I hate modern idiots.

That’s weird. I’m absolutely positive Grok’s answer matches law enforcement statistics, as well.

yeah, but the pigs don’t care about right-wing violence, because it’s them under the white masks.

Working on it means he forwarded a screenshot to somebody who works for him with a bunch of ???

Meanwhile, depending on office politics, that guy will unfortunately have to spend the next 3 months figuring out how to alter the facts or just suppress data made by the AI that the boss doesn’t like.

Boycotting Twitter and all of Musk’s companies is now more urgent than ever. Grok is going to be even more rigged to spew out hateful nonsense.

I feel it always has been rigged, it’s just that the rigging has always been so incompetent

Yep. When I was arguing with a Musk fan acquaintance, Grok always took my side (and the guy ofc always ignored its opinion despite assuring me that it’s the most accurate model ever).

“Waahhh! Saying it’s false means it must be false despite all that pesky evidence to the contrary!”

Democrats weren’t assassinating people at the No Kings protests.

Baby steps

I genuinely cannot understand why folks are still using that platform.

So then Hillary won the election?

He’s right, though. It is false. Left wing violence is not on the rise.

So Elon has lost control of Grok😂

Hey do you guys think AIs will be benevolent towards us?

We deserve The Basilisk.