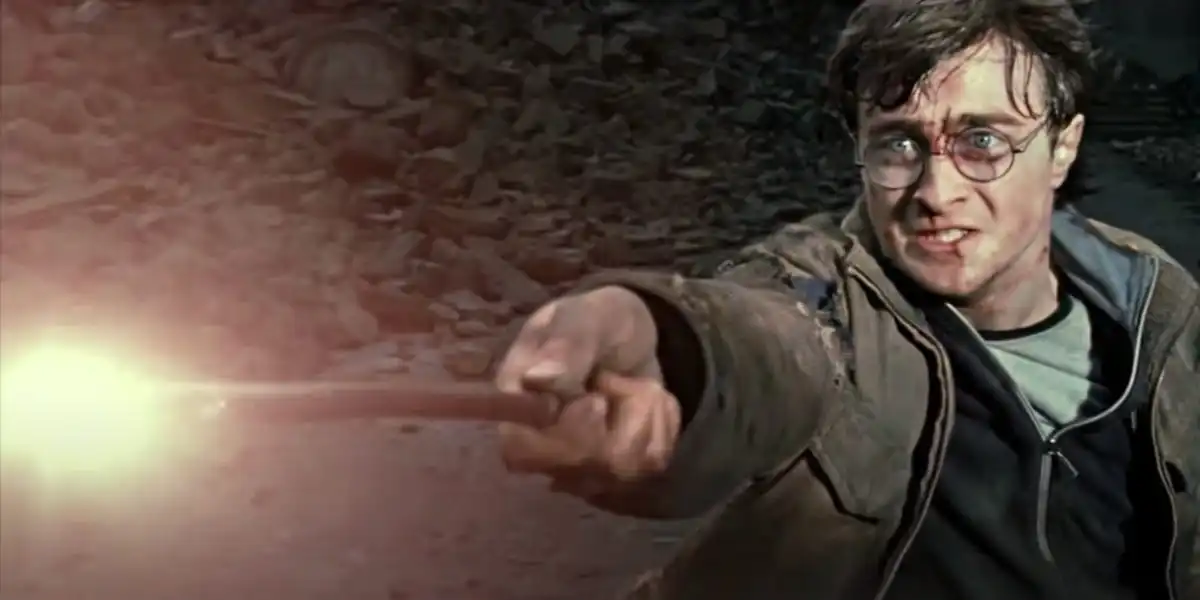

OpenAI now tries to hide that ChatGPT was trained on copyrighted books, including J.K. Rowling’s Harry Potter series

A new research paper laid out ways in which AI developers should try to avoid showing that LLMs have been trained on copyrighted material.

Our ancient legal system trying to lend itself to “protecting authors” is fucking absurd. AI is the future. Are we really going to let everyone take a shot at suing these guys over this crap? It’s a useful program and infrastructure for everyone.

Holding technology back because of antiquated copyright law is downright absurd.

Edit: I want to add that I’m not suggesting copyright should be a free-for-all on your books or hard work. Rather, this is a computer program and a major breakthrough, and just as no one sues my brain when I read a book, I don’t think we should sue an AI: it is not reproducing books, in the same way that the many notes websites that summarize books do not reproduce those books. This is contingent on OpenAI not doing anything that forces its reputation to be re-evaluated (i.e., my view is subject to change if they start trying to reproduce books).

Stop comparing AI to a person. It’s not a person, it doesn’t do the things a person does, and it doesn’t have the rights of a person.

And yes, the laws are antiquated. We need new laws that will protect authors.

Finally, no, you can’t just throw out all other considerations because you think AI is useful.

AI access to information is dependent on the access humans have.

That doesn’t make it a person.

That’s not in any way the issue here.

A lot of people seem to think it is, constantly conflating the fact that they can read a book at the library with AI being able to do whatever it wants.

I’ve seen the comment “it’s not human” like 8 times. Those comments are the only examples I see of someone incorrectly conflating things.

People are pointing out the issue of the right to access information freely, like at a library, or with private property. I am free to do what I want with it, including feeding it to a machine I built in order to train that machine. Restricting that is wrong.

And we have determined that AI-created work cannot be copyrighted, because it’s not a person. Nobody’s trying to claim that AI somehow has the rights of a person.

But reading a bunch of books and then creating new material using the knowledge gained from those books is not copyright infringement and should not be treated as such. I can take Andy Warhol’s style and create as many advertisements as I want with it. He doesn’t own the style; nobody does.

Why should that be any different for a company using AI? Makes no sense to me.

You have been duped into thinking copyright is protecting authors when really copyright primarily exists to protect companies like Disney.

For clarity’s sake, the original intent behind copyright was definitely to protect authors and thereby foster creativity, but corporations like Disney have lobbied very successfully over the years to prevent original works from becoming public domain.

Meanwhile, in classic fashion, those same companies have taken public domain works and turned them into ludicrously successful IPs!

I argue that this is a positive aspect of capitalism that our governments have unduly suppressed in favor of corporate sponsors (further solidified by an increasing legal allowance of such sponsorships), and that we should return to a more reasonable timeframe for full exclusivity.

Well copyright certainly isn’t protecting authors if big corporations can use their works without paying for them. That’s the whole point.

It is well established in the law that you cannot copyright ideas, and that creators are allowed to amalgamate different pieces of copyrighted material to create something new, which isn’t covered by copyright.

Think of it this way. Tarantino watched a lot of movies before he made Reservoir Dogs. The movie was inspired by Kansas City Confidential. He took a bunch of styles and inspirations from various different movies he had seen, put them together in a new way, and released the movie. This is the way creative work happens. Nothing is from scratch. Everything is built on everything else. The only difference I see is that this process is being done automatically by pattern-finding algorithms.

Sure, but then it’s only fairer for these companies to pay up during the training of an AI. Schools don’t get to copy entire book transcripts off the Internet for lessons. They can’t pirate documentaries. And in higher education, the student pays tuition to learn information. Students can pirate textbooks, but that alone isn’t enough to learn a field of study.

If we’re going to use human analogies for AI, then it should be limited in the same ways. The companies would have to buy any books or media they use, or use material that is explicitly in the public domain with respect to copyright law: material you could post a transcript of online in front of the most litigious lawyer, and nothing would happen.

Couple of things. There is no way to prove they are using “pirated content”. There are web scrapers that go through the internet and scrape everything. This is going to include discussion, articles, blog posts, and video transcripts of many people discussing copyrighted content. The AI can give you a reasonable analysis for a book without ever having read the book because of this.

Everything online is public. You cannot force someone to pay for seeing your reddit post. Second, I bet they have large databases of all the books in the public domain. This includes a very large corpus of text.

This alone is probably enough to train their AI. Beyond that, presumably, they could pay for books. Textbooks, fiction, biographies, etc. They could pay for these and pump them into the system.

If I were them, personally, I would probably just find large torrents with all the books. Or write some automated script to pull from libgen. But there’s no real way of proving how they did this and there’s no real way of proving what content the AI was trained on.

It would create incredible legal liability for the company. If the authors and publishing studios caught wind of that, the AI companies would be sued into oblivion. Think about how intense media companies get about pirating when it’s just for pleasure or entertainment – if you’re using it to turn a profit, you’re legally fucked.

Having no chain of custody for knowing what the AI was trained on sounds like typical cost cutting, until you realize this means they can’t detect or identify another AI’s output. They’ll quickly become a garbage model.

Well, obviously it’s a massive legal liability. However… seemingly legitimate, serious companies with large legal departments have been known to do legally dangerous things before. Apple deliberately sabotaged old iPhones by sending updates to drain the battery, encouraging people to get new phones. Volkswagen faked their emissions tests (if I remember correctly, people went to prison and Apple had to pay out fines). I don’t put it past OpenAI to be doing illegal things for short-term benefit to their long-term detriment.

Not saying it’s happening, but I’m saying it’s possible and it’s hard for me or you to prove that it’s happening.

I’m sure they have good internal controls for what goes in the model. I’m guessing the information is very tightly controlled, for above reasons. I’m not sure what you mean by another AI’s output though.

I’m not sure about that at all. At what point does a computer program become intelligent enough to have some cognition of fair use while still not having human rights?

I think it needs to be really hashed out by someone who understands both copyright law and data warehouses, and some programming. It’s a sparse field for sure but we need someone equipped for it.

Because I don’t think it’s as linear as you’re describing it.

deleted by creator

Spoken like someone who never reads.

People see that they were purchased for 10 billion dollars and want a piece of the pie.

Lawyers get paid regardless and are willing to yet again fuck regular folk and strip us of more things. The Internet was so much more fun before they showed up and started suing everybody and issuing DMCA takedowns.

Yes, this is the fundamental point.

deleted by creator