See THIS POST

Notice the 2,000 upvotes?

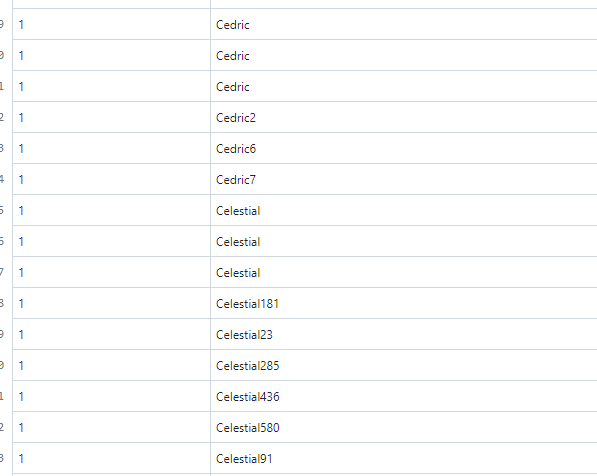

https://gist.github.com/XtremeOwnageDotCom/19422927a5225228c53517652847a76b

It’s mostly bot traffic.

Important Note

The OP of that post did admit to purposely using bots for that demonstration.

I am not making this post specifically about that post. Rather, we need to collectively organize and find a method for dealing with bot traffic.

Defederation is a nuke-from-orbit approach, which WILL cause more harm than good over the long run.

Having admins proactively monitor their content and communities helps, as does enabling new user approvals, captchas, email verification, etc. But this does not solve the problem.

The REAL problem

The real problem is that the fediverse is so open, there is NOTHING stopping dedicated bot owners and spammers from…

- Creating new instances for hosting bots, and then federating with other servers. (Everything can be fully automated to completely spin up a new instance, in UNDER 15 seconds)

- Hiring kids in Africa and India to create accounts for 2 cents an hour. NEWS POST 1 POST TWO

- Lemmy is EXTREMELY trusting. For example, go look at the stats for my instance online… (lemmyonline.com) I can assure you, I don’t have 30k users and 1.2 million comments.

- There are no built-in “real-time” methods for admins to identify suspicious activity from their users via the UI. I am only able to fetch this data directly from the database. I don’t think it is even exposed through the REST API.
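As a rough illustration of the kind of check an admin could run on data pulled from the database, here is a minimal Python sketch. It assumes vote records have already been exported as (user, timestamp) pairs. The function name, the one-minute window, and the 20-vote threshold are all made up for illustration and are not part of Lemmy.

```python
from collections import defaultdict
from datetime import datetime, timedelta


def flag_bursty_voters(votes, window=timedelta(minutes=1), max_votes=20):
    """Return the set of users who cast more than max_votes votes
    inside any sliding time window of the given length.

    votes: iterable of (user, timestamp) pairs. This shape is an
    assumption for the sketch, not Lemmy's actual schema.
    """
    by_user = defaultdict(list)
    for user, stamp in votes:
        by_user[user].append(stamp)

    flagged = set()
    for user, stamps in by_user.items():
        stamps.sort()
        start = 0
        for end, stamp in enumerate(stamps):
            # Shrink the window from the left until it spans at most `window`.
            while stamp - stamps[start] > window:
                start += 1
            if end - start + 1 > max_votes:
                flagged.add(user)
                break
    return flagged


# Example data: one account voting every second, one voting every ten minutes.
base = datetime(2023, 6, 1, 12, 0, 0)
sample_votes = [("bot", base + timedelta(seconds=i)) for i in range(30)]
sample_votes += [("human", base + timedelta(minutes=10 * i)) for i in range(30)]
suspicious = flag_bursty_voters(sample_votes)
```

A threshold like this only catches crude bursts. Slow, distributed voting would need something smarter, but it shows the sort of signal that currently has to be dug out of the database by hand.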

What can happen if we don’t identify a solution.

We know Meta wants to infiltrate the fediverse. We know Reddit wants the fediverse to fail.

If a single user with limited technical resources can manipulate that content, as was proven above, what is going to happen when big-corpo wants to swing their fist around?

Edits

- Removed most of the images containing instances. Some of those issues have already been taken care of. As well, I don’t want to distract from the ACTUAL problem.

- Cleaned up post.

I agree. This is why I started the Fediseer which makes it easy for any instance to be marked as safe through human review. If people cooperate on this, we can add all good instances, no matter how small, while spammers won’t be able to easily spin up new instances and just spam.

What- is the method for myself and others to contribute to it, and leverage it?

First we need to populate it. Once we have a few good people who are regularly guaranteeing new instances, we can extend it to most known good servers and create a “request for guarantee” pipeline. The instance admins can then leverage it by either using it as a straight whitelist, or more lightly by monitoring traffic coming from non-guaranteed instances more closely.

The fediseer just provides a list of guaranteed servers. It’s open ended after that so I’m sure we can find a proper use for this that doesn’t disrupt federation too much.
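To make the two options concrete, here is a minimal Python sketch of how an admin-side tool might treat an incoming instance. It assumes the list of guaranteed domains has already been fetched into a set. The `Policy` enum, `classify_instance`, and the example domains are hypothetical names for illustration, not part of the Fediseer or Lemmy.

```python
from enum import Enum


class Policy(Enum):
    ALLOW = "allow"    # guaranteed instance, federate normally
    REVIEW = "review"  # not guaranteed, watch its traffic more closely
    REJECT = "reject"  # not guaranteed, and we run a straight whitelist


def classify_instance(domain, guaranteed, strict=False):
    """Decide how to treat an instance based on a set of guaranteed domains.

    strict=True  -> use the list as a straight whitelist.
    strict=False -> only flag non-guaranteed instances for closer monitoring.
    """
    if domain.lower() in guaranteed:
        return Policy.ALLOW
    return Policy.REJECT if strict else Policy.REVIEW


# Hypothetical guaranteed list; in practice this would come from the Fediseer.
guaranteed_domains = {"good.example", "small-but-fine.example"}

light = classify_instance("unknown.example", guaranteed_domains)
whitelist = classify_instance("unknown.example", guaranteed_domains, strict=True)
known = classify_instance("Good.Example", guaranteed_domains)
```

The lighter mode is the one that avoids disrupting federation: nothing gets blocked, the unknown instance just gets more scrutiny.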

So- the TLDR;

Essentially, a few handfuls of trusted individuals voting for the authenticity of instances?

I like the idea.

When it’s worded this way, replace ‘trusted individual’ with ‘reddit admin’.

Isn’t it similar to putting a select group in charge? How is it different?

Well- because instance owners have full control over what they want to do with the data too.

It’s not forced. It’s just- a directory of instances, which were vetted by others.

Actually, not a handful. Everyone can vouch for others, so long as someone else has vouched for them.

One recommendation- how do we prevent it from being potentially brigaded?

Someone vouches for a bad actor, bad actor vouches for more bad actors- then they can circle jerk their own reputation up.

Edit-

Also, what prevents actors from “downvoting” instances hosting content they just don’t like?

For example, yesterday half of Lemmy wanted to defederate from sh.itjust.works over a community called “the_donald”, which contained a single troll shit-posting. (The admins have since banned the troll and removed the problem.) Still, everyone’s knee-jerk reaction was to just defederate. Nuke from orbit.

There’s a chain of trust. If a bad actor lets in all their friends, withdrawing the guarantee from that bad actor withdraws it from all their friends as well.
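Here is a minimal Python sketch of that cascade, assuming each instance has exactly one guarantor and the chain starts from a single root. The `TrustChain` class and the example domains are hypothetical, and this is not how the Fediseer is necessarily implemented. It only illustrates the idea.

```python
class TrustChain:
    """Toy chain of trust: every instance except the root has one guarantor."""

    def __init__(self, root):
        # Maps each guaranteed instance to the instance that vouched for it.
        self.guarantor = {root: None}

    def is_guaranteed(self, instance):
        return instance in self.guarantor

    def guarantee(self, guarantor, instance):
        """Let an already guaranteed instance vouch for a new one."""
        if guarantor not in self.guarantor:
            raise ValueError(f"{guarantor} is not guaranteed and cannot vouch")
        if instance in self.guarantor:
            raise ValueError(f"{instance} is already guaranteed")
        self.guarantor[instance] = guarantor

    def revoke(self, instance):
        """Withdraw the guarantee from an instance and everything downstream.

        Returns the set of instances that lost their guarantee.
        """
        removed = set()
        stack = [instance]
        while stack:
            current = stack.pop()
            if current not in self.guarantor:
                continue
            removed.add(current)
            del self.guarantor[current]
            # Anything vouched for by the removed instance falls with it.
            stack.extend(
                child for child, parent in self.guarantor.items()
                if parent == current
            )
        return removed


chain = TrustChain("root.example")
chain.guarantee("root.example", "good.example")
chain.guarantee("root.example", "bad.example")
chain.guarantee("bad.example", "friend1.example")
chain.guarantee("bad.example", "friend2.example")
chain.guarantee("friend1.example", "friend3.example")

lost = chain.revoke("bad.example")
```

One revocation at the top of the bad branch takes out the whole ring, while `good.example` is untouched.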

There’s no “downvote”, but even if I add one, I would add a filter so you can ignore “downvotes” from people you don’t agree with, or only see “downvotes” from instances you agree with.

I dig the idea. Let me know when we have a good method for getting it set up, and a reasonable GUI for viewing/managing the data.

I can help build tools if needed, but anything I build would more than likely be in .NET.

What do you mean? It’s already set up.

About the GUI, I’m not a frontend engineer. Hopefully someone can make one.

As for contributing, it’s open source, so if you have ideas for further automation I’m all ears.