Tech guy invents the concept of giving instructions

With clear requirements and outcome expected

Why did no one think of this before

Who does that? What if they do everything right and it doesn’t work and then it turns out it’s my fault?

It would be nice if it was possible to describe perfectly what a program is supposed to do.

Someone should invent some kind of database of syntax, like a… code

But it would need to be reliable with a syntax, like some kind of grammar.

That’s great, but then how do we know that the grammar matches what we want to do - with some sort of test?

How do we know what to test? Maybe with some kind of specification?

People could give things a name and write down what type of thing it is.

A codegrammar?

We don’t want anything amateur. It has to be a professional codegrammar.

What, like some kind of design requirements?

Heresy!

Design requirements are too ambiguous.

Design requirements are what it should do, not how it does it.

That’s why you must negotiate or clarify what is being asked. Once it has been accepted, it is not ambiguous anymore as long as you respect it.

I’m a systems analyst, or in agile terminology “a designer” as I’m responsible for “design artifacts”

Our designs are usually unambiguous

What did you say?

I think our man meant in terms of real-world situations

And NOT yet another front page written in ReactJS.

Oh, well, that’s good, because I have a ton of people who work with Angular and not React.

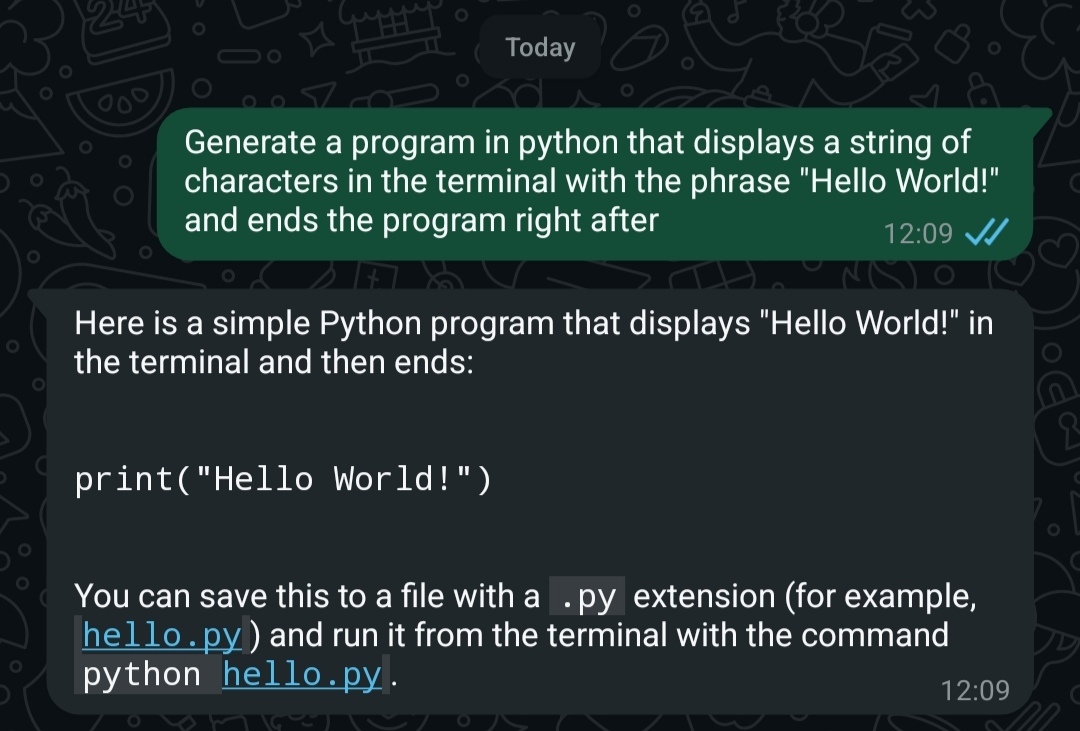

This still isn’t specific enough to specify exactly what the computer will do. There are an infinite number of python programs that could print Hello World in the terminal.
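To illustrate the point above, here is a minimal sketch of three different Python programs that all satisfy the same "print Hello World" requirement. The function names and the `captured` helper are made up for this example; the only claim is that distinct source code can produce identical terminal output.

```python
import io
from contextlib import redirect_stdout


def hello_direct():
    # The obvious version: print the literal string.
    print("Hello World")


def hello_joined():
    # Same output, built by joining two words with a space.
    print(" ".join(["Hello", "World"]))


def hello_from_codes():
    # Same output again, built from character codes.
    codes = [72, 101, 108, 108, 111, 32, 87, 111, 114, 108, 100]
    print("".join(chr(c) for c in codes))


def captured(fn):
    # Hypothetical helper: run fn and return whatever it printed.
    buf = io.StringIO()
    with redirect_stdout(buf):
        fn()
    return buf.getvalue()


# Collect the distinct outputs of all three programs.
outputs = {captured(f) for f in (hello_direct, hello_joined, hello_from_codes)}
```

All three land in the same single-element set, so a spec that only states the visible output cannot tell them apart.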

I knew it, I should’ve asked for assembly

Yeah but that’s a lot of writing. Much less effort to get the plagiarism machine to write it instead.

Ha

None of us would have jobs

I think the joke is that that is literally what coding is.

Who even makes these comics? Is it like the Simpsons?

Randall Munroe. You may know him from such gems as xkcd 3472 and 6548.

Getting a bit ahead of yourself, we’re only on 3070 so far!

This is weird, it’s just directing me to the 404th comic? https://www.explainxkcd.com/wiki/index.php/404:_Not_Found

You have to be more patient, those ones will take a while to load.

Web browsing 101: if you see a hyperlink on social media, you can click on it and then look around to see if it contains more links with useful information, often in the header or footer of the page. Here I found one for you: https://xkcd.com/about/

Human communication 101: sometimes humans ask a question without expecting an answer, it’s called a rhetorical question

Sorry, I assumed this was a place of discussion and conversation. You can either be helpful or not, but it’s generally considered a dick move to taunt while being helpful.

OP just chatting with themselves so they can screenshot it?

That is some Telegram group, and both messages show on the left with profile icons (which got cropped). The person who took the screenshot sent the last message, which shows double ticks.

In the desktop client the positions of bubbles also depend on the width of the window.

Great attention to detail!

That’s just a fake conversation in general, look at the timestamps between the messages from the interlocutor. Several minutes to type a complete sentence?

Hey, I can take a few hours to reply sometimes :c

Could be a group chat but we all know they’re a twat

I wrote a shell script like this (IT admin, not a dev) for private use.

The prompt took me like 5 hours of rewriting the instructions.

Don’t even know yet if it works (lol)

Neural network: for when saying LLM doesn’t sound smart enough

It’s just what it was called in the nineties.

LLMs are a type of neural network.

Calling GPT a neural network is pretty generous. It’s more like a markov chain

It legitimately is a neural network, I’m not sure what you’re trying to say here. https://en.wikipedia.org/wiki/Generative_pre-trained_transformer

You’re right, my bad.

I’ve played with markov chains. They don’t create serious results, ever. ChatGPT is right just often enough for people to think it’s right all the time.

The core language model isn’t a neural network? I agree that the full application is more Markov chainy but I had no idea the LLM wasn’t.

Now I’m wondering if there are any models that are actual neural networks

I’m not an expert. I’d just expect a neural network to follow the core principle of self-improvement. GPT is fundamentally unable to do this. The way it “learns” is closer to the same tech behind predictive text in your phone.

It’s the reason why it can’t understand why telling you to put glue on pizza is a bad idea.

the main thing is that the system end-users interact with is static. it’s a snapshot of all the weights of the “neurons” at a particular point in the training process. you can keep training from that snapshot for every conversation, but nobody does that live because the result wouldn’t be useful. it needs to be cleaned up first. so it learns nothing from you, but it could.
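A toy sketch of the snapshot idea described above, assuming a one-neuron "model" with a single weight and bias. The `Snapshot` class, `predict`, and `train_step` are invented for illustration and are nothing like a real LLM's internals; the point is only that answering questions reads the weights, while training produces a new set of weights.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Snapshot:
    # Frozen: the deployed weights cannot be modified in place.
    weight: float
    bias: float


def predict(model, x):
    # Inference only reads the weights; it never changes them.
    return model.weight * x + model.bias


def train_step(model, x, target, lr=0.1):
    # One gradient-descent step on squared error.
    # Returns a NEW snapshot instead of touching the deployed one.
    error = predict(model, x) - target
    return Snapshot(
        weight=model.weight - lr * error * x,
        bias=model.bias - lr * error,
    )


deployed = Snapshot(weight=2.0, bias=0.0)

before = predict(deployed, 3.0)
predict(deployed, 5.0)          # another "conversation" in between
after = predict(deployed, 3.0)  # same answer: nothing was learned

# Training from the snapshot is possible, but it is a separate step
# that yields different weights.
updated = train_step(deployed, 3.0, target=9.0)
```

Here `before` and `after` are identical because the deployed snapshot never changes, while `updated` is a different model that sits closer to the target.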

“Improvement” is an open ended term. Would having longer or shorter toes be beneficial? Depends on the evolutionary environment.

ChatGPT does have a feedback loop. Every prompt you give it affects its internal state. That’s why it won’t give you the same response next time you give the same prompt. Will it be better or worse? Depends on what you want.